Delta Lake vs. Apache Iceberg: Choosing the Ideal Table Format for Your Data Lake

Navigating the Battle of Table Formats: Which One Fits Your Data Lake Best?

In the world of modern data lakes, Delta Lake and Apache Iceberg are often at the forefront of discussions around table formats. Both provide essential features like transactional consistency, schema evolution, and efficient query performance. But their approaches differ, and understanding these distinctions is key to choosing the right one for your needs. Let’s explore these points in depth.

1. Origins and Ecosystem Influence

The origins of a technology often reveal its strengths:

Delta Lake, developed by Databricks and open-sourced in 2019 to address the complexities and reliability issues of traditional data lakes, has since evolved through substantial community contributions. Initially designed to unify batch and streaming operations, it became a natural fit for Spark-based workflows, offering consistency and simplicity for organizations heavily reliant on Apache Spark. Over time, Delta Lake has expanded its scope, gaining support beyond the Databricks ecosystem and solidifying its role in modern data architectures.

Apache Iceberg, developed by Netflix in 2017 to overcome Hive’s limitations in handling incremental processing and streaming data, was donated to the Apache Software Foundation in 2018, becoming an open-source project. Designed to manage complex, petabyte-scale data lakes, Iceberg has since become a cornerstone of modern data lake architectures, widely adopted across industries. Governed by the Apache Software Foundation, it is built to be compute-engine agnostic, making it compatible with diverse tools like Trino, Spark, and Flink.

2. Metadata Management

Efficient metadata handling is critical as data lakes grow, and this is where Apache Iceberg takes a unique approach:

Iceberg employs a distributed metadata model, which stores metadata independently of the compute engine. This allows for highly scalable operations, as query engines can access only the metadata they need without scanning unnecessary partitions or logs.

Delta Lake, in contrast, relies on a transaction log stored in object storage. While this is optimized for Spark queries, it may lead to bottlenecks when scaling beyond Spark-centric environments or querying massive datasets.

A Big plus for delta regarding the metadata is the table location:

Delta Lake employs relative paths in its transaction log, which simplifies internal file referencing and enhances portability within the same storage environment. By using relative paths, Delta Lake allows for seamless table operations—such as appends, updates, and schema changes—without needing to adjust file locations, making it easier to manage and move tables within a consistent storage framework.

Apache Iceberg, on the other hand, utilizes absolute paths in its manifests, ensuring precise and stable file references across different compute engines and storage locations. While this provides robustness for multi-engine access and consistent data handling, it introduces challenges when copying or relocating tables. For example, during replication, where a table’s state and history are duplicated to another data center or availability zone, or backup scenarios that involve archiving table states for recovery, the absolute file references must be updated to reflect the new storage locations. This requirement necessitates additional steps to modify file paths, adding complexity to table management and migration processes

you can read about this open issue.

3. Schema Evolution and Partitioning

Both formats support schema evolution, but Iceberg takes a step further:

Apache Iceberg provides a more flexible approach to both schema evolution and partitioning. Its metadata layer allows seamless column additions, deletions, and renames without rewriting underlying data files, which significantly reduces operational overhead. Partitioning is driven by transform functions (e.g., truncate, bucket, year) and can be evolved over time—such as switching from daily to monthly partitions—again without forcing expensive data rewrites. This “hidden partitioning” strategy helps maintain performance as data volumes grow and requirements change.

Delta Lake supports schema evolution but typically requires explicit actions—such as enabling spark.databricks.delta.schema.autoMerge.enabled or running with merge schema as shown:

df.write.option("mergeSchema", "true").mode("append").format("delta").save( "tmp/fun_people" )

— to accommodate changes without rewriting existing data. This controlled approach ensures data integrity but can introduce additional operational steps. For partitioning, Delta Lake uses user-defined columns, so you must carefully plan your partition strategy (e.g., by date or region) at table creation to maximize data skipping benefits. Adjusting partitions later often involves rewriting data, making initial design choices particularly important in growing datasets.

Imagine a scenario where your dataset includes dynamic partitions that frequently change. Iceberg’s hidden partitioning will automatically adapt, saving you from the hassle of manually updating configurations.

4. Engine Interoperability

One of Iceberg’s most significant advantages is its engine-agnostic design:

Apache Iceberg works seamlessly with tools like Spark, Trino, Presto, Flink, and Snowflake, allowing teams to use the best compute engine for their workload. This flexibility is invaluable for organizations operating in multi-cloud or hybrid environments.

Delta Lake has made strides to expand its compatibility but remains heavily optimized for Spark-based workflows. While it now works with Trino and Presto, its deepest integrations are still within Databricks.

For a team looking to leverage Trino for interactive queries, Flink for streaming, and Snowflake for warehousing, Iceberg offers unmatched flexibility.

5. Community and Governance

The governance models of these technologies impact their innovation and adoption:

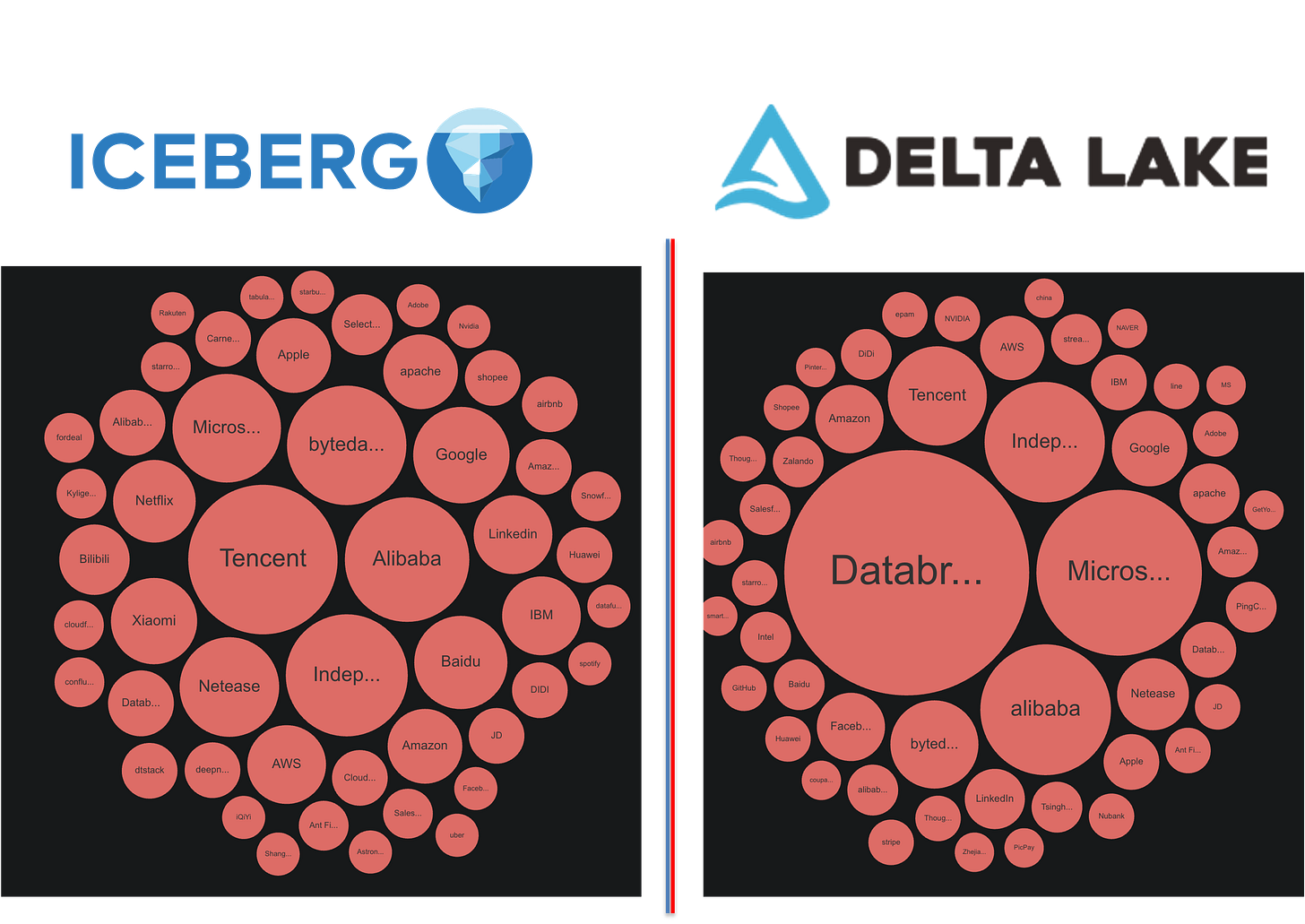

Apache Iceberg benefits from Apache governance, which ensures vendor neutrality and encourages contributions from a diverse set of organizations, including Netflix, Apple, and Tabular. This collaborative approach leads to rapid innovation and adoption across industries.

Delta Lake was initially proprietary but has since open-sourced its core under the Linux Foundation. While this has broadened its adoption, its community is still more centralized around Databricks.

If vendor neutrality and an active open-source community are priorities, Iceberg is the clear choice.

6. Real-World Use Cases

Delta Lake excels when tightly integrated with Spark, offering seamless streaming ingestion, real-time analytics, and robust batch ETL through features like MERGE INTO for upserts. It employs a transaction log to provide time travel and data auditing, allowing users to pinpoint table versions for debugging or compliance checks. While primarily Spark-centric, Delta supports connectors for other engines, though its strongest optimizations are in the Databricks environment. With file-level statistics for query pruning and strict schema enforcement, it caters well to enterprise data governance needs. Incremental CDC patterns are straightforward, and its proven performance in large-scale historical analytics makes it a trusted choice for Spark-driven data lakes.

Apache Iceberg stands out for its engine-agnostic design and flexible metadata layer, offering smooth schema evolution (renaming, adding, or dropping columns without rewriting files) and hidden partitioning that adapts as data grows. Iceberg supports streaming writes across multiple engines, providing snapshot isolation for consistent views and enabling advanced metadata capabilities through Puffin—particularly helpful for approximations and secondary indexes. Its manifest files store fine-grained column statistics for efficient file pruning, and time travel is handled via distinct snapshots, making audits or historical queries straightforward. Iceberg’s broad support from various vendors and open-spec approach suits organizations seeking multi-engine interoperability and modern lakehouse features.

7. Advanced statistics - Puffin files

Puffin Files in Apache Iceberg: Enhancing Metadata for Smarter Query Planning

Puffin is a new file format introduced by the Apache Iceberg community, designed to hold advanced metadata like statistics and indexes to optimize query performance. While Parquet and Iceberg manifest files already provide valuable metadata for query planning, Puffin offers additional benefits by enabling incremental metadata storage for tasks like calculating distinct value counts (NDV), creating secondary indexes, and more.

Why Puffin?

Unlike Parquet or Avro, which are not suited for storing large, complex metadata like sketches, Puffin provides a flexible structure to store these large “blobs” of information. These blobs, such as Apache DataSketches, enable efficient approximations for operations like distinct counts, quantiles, and median calculations. This can significantly reduce the computational overhead and time required for query optimization, especially for resource-intensive tasks like join reordering or approximate aggregations.

Current and Future Use Cases

• NDV Calculation: Puffin enables efficient storage and incremental updates of approximate distinct value counts, helping query engines plan smarter without rescanning entire tables.

• Secondary Indexes: Future use cases include storing large indexes like bloom filters, improving data pruning for massive datasets.

Puffin is a step toward enabling better interoperability among query engines by providing a standardized, extensible format for storing advanced statistics and indexes. While still in its early stages, it lays the groundwork for more efficient query planning and faster insights in Apache Iceberg.

Delta Lake lack of support for Puffin-like capability means users with complex analytical workloads (e.g., distinct count estimations, approximate joins, or heavy aggregations) might face additional overhead when generating or storing approximations.

If you still Don’t believe me - check out this linkedin post Puffin Benchmark

8. Looking Ahead: Innovation in Table Formats

Both formats are actively evolving:

Delta Lake is expanding its capabilities with new APIs and better interoperability. Its tight coupling with Databricks ensures it remains a powerful option for Spark users.

Apache Iceberg is pushing boundaries with features like puffin files and file-level metadata optimization, which reduce operational overhead and improve performance at scale.

The competition between Delta Lake and Iceberg is driving innovation, benefiting the entire data lake ecosystem.

Conclusion

Both Delta Lake and Apache Iceberg exemplify the next generation of data lake table formats, offering ACID transactions, scalable metadata management, and improved query efficiency. Your choice ultimately hinges on the engines, ecosystems, and workloads that matter most to your organization. If you’re deeply invested in Spark-based pipelines and Databricks, Delta Lake’s transaction log, built-in streaming support, and robust ecosystem may serve you best. On the other hand, if you anticipate working across multiple engines—or need the seamless schema evolution, hidden partitioning, and advanced metadata indexing that Puffin provides—Apache Iceberg offers a more flexible, open-spec solution. Whichever format you choose, the competition between these two is driving continuous innovation, making modern data lakes more powerful, efficient, and adaptable than ever before.