Cracking the Ice: The Battle Between Sort and Binpack in Apache Iceberg

Unlocking performance vs. optimizing storage — choosing the right compaction strategy for your data lake.

Apache Iceberg has become a popular table format for modern data lakes due to its efficient metadata handling, schema evolution capabilities, and support across multiple query engines. However, maintaining optimal performance and cost efficiency for Iceberg tables requires regular compaction. Two primary strategies are often debated: Sort and Binpack. Let's dive into each method and understand their strengths and trade-offs.

Understanding the Small Files Problem

When dealing with data lakes and modern distributed data storage, the way your data is organized into files significantly impacts performance. Query engines must open, read, and close every file containing relevant data. In real-time or streaming scenarios, frequent ingestion creates numerous small files, each containing minimal data. This leads to two primary issues:

High file I/O overhead: More files mean more operations, which slows down queries and consumes compute resources.

Metadata explosion: Each file adds metadata. A large number of small files leads to bloated metadata, slowing query planning.

Compaction solves these problems by merging small files into fewer, larger files — reducing I/O and metadata overhead.
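To make the I/O overhead concrete, here is a toy cost model in Python. It is a sketch under stated assumptions, not a measurement: each file is assumed to cost a fixed overhead (open, read footer, close) plus a per-byte transfer time, and the constants (10 ms per file, 200 MB/s) are hypothetical.

```python
# Toy scan-cost model. The constants are hypothetical, chosen only for illustration.
GB = 1024 ** 3


def scan_seconds(total_bytes: int, n_files: int,
                 per_file_overhead_s: float = 0.01,
                 bytes_per_s: float = 200e6) -> float:
    """Estimated scan time: a fixed cost per file (open, read footer, close)
    plus the time to transfer the bytes themselves."""
    return n_files * per_file_overhead_s + total_bytes / bytes_per_s


if __name__ == "__main__":
    many_small = scan_seconds(4 * GB, n_files=1000)  # ~4 MB per file
    few_large = scan_seconds(4 * GB, n_files=8)      # 512 MB per file
    print(f"1000 small files: {many_small:.2f} s, 8 large files: {few_large:.2f} s")
```

With the same 4 GB of data, the transfer term is identical in both cases; the whole difference comes from the per-file overhead multiplied by the file count, which is exactly the term compaction shrinks.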

Binpack Compaction

Binpack compaction packs small data files into larger files close to a target size (set by Iceberg's target-file-size-bytes option) without reordering the rows inside them. Its goal is primarily storage optimization and metadata simplification.

Pros:

Faster compaction process with lower CPU and memory overhead.

Excellent for general storage optimization and metadata management.

Ideal for workloads where query patterns are highly unpredictable.

Cons:

Minimal improvements to query performance compared to sorted data.

Potentially increased I/O for selective queries.
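The planning side of binpack can be pictured as a classic bin-packing problem. The Python below is a simplified illustration using first-fit decreasing, not Iceberg's actual planner: it groups input file sizes so that each group's total stays at or below a target size, and each group would then be rewritten as one output file with its rows in their existing order. The 512 MB target matches Iceberg's default write.target-file-size-bytes; the input file sizes are made up.

```python
# Simplified binpack planning via first-fit decreasing (illustrative only,
# not Iceberg's real planner). Only sizes matter; column values are never read.
from typing import List

MB = 1024 * 1024


def plan_binpack(file_sizes: List[int], target_bytes: int) -> List[List[int]]:
    """Group file sizes into bins whose totals stay at or below target_bytes.
    A file larger than the target simply ends up alone in its own bin."""
    bins: List[List[int]] = []
    totals: List[int] = []
    # Placing the largest files first tends to leave fewer, fuller bins.
    for size in sorted(file_sizes, reverse=True):
        for i, total in enumerate(totals):
            if total + size <= target_bytes:
                bins[i].append(size)
                totals[i] += size
                break
        else:
            # No existing bin has room: open a new one.
            bins.append([size])
            totals.append(size)
    return bins


if __name__ == "__main__":
    # 1,000 small files of 4 MB each, e.g. from streaming ingestion.
    small_files = [4 * MB] * 1000
    groups = plan_binpack(small_files, target_bytes=512 * MB)
    print(len(small_files), "files ->", len(groups), "files")
```

Packing 1,000 files of 4 MB into 512 MB groups yields 8 output files. Nothing in the plan looks at column values, which is why binpack is cheap and also why it does little for selective queries.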

Sort Compaction

Sort compaction reads data files, sorts their rows by specified columns, and writes the sorted data back into fewer, larger files. This significantly improves query performance, particularly for range-based queries, because rows that are queried together are physically stored close together.

Pros:

Improved query performance due to locality and sorted order.

Reduced I/O operations for range and filter queries.

More effective predicate pushdown: tighter per-file min/max statistics let query engines skip more files, so less data is scanned per query.

Cons:

Higher CPU and memory consumption during compaction.

Longer execution time, especially with large datasets.
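The sort variant can be sketched the same way. This minimal Python sketch works under simplifying assumptions (rows held in memory as dictionaries, output files cut by row count rather than bytes): it merges all input rows, sorts them by the chosen columns, and writes contiguous slices as new files, recording each file's min and max sort key. Those bounds play the role of the per-column lower and upper bounds Iceberg keeps in its manifests.

```python
# Minimal in-memory sketch of sort compaction (illustrative assumptions:
# rows are dicts, output files are sized by row count, not bytes).
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Row = Dict[str, object]


@dataclass
class DataFile:
    rows: List[Row]
    min_key: Tuple  # smallest sort key in the file
    max_key: Tuple  # largest sort key in the file


def sort_compact(input_files: Sequence[List[Row]],
                 sort_columns: Sequence[str],
                 rows_per_file: int) -> List[DataFile]:
    def key(row: Row) -> Tuple:
        return tuple(row[c] for c in sort_columns)

    # 1. Read every row from every input file.
    all_rows = [row for f in input_files for row in f]
    # 2. Sort globally by the sort columns (the expensive step).
    all_rows.sort(key=key)
    # 3. Write contiguous slices as new files; since the slice is sorted,
    #    its first and last rows give the file's min and max key.
    output: List[DataFile] = []
    for start in range(0, len(all_rows), rows_per_file):
        chunk = all_rows[start:start + rows_per_file]
        output.append(DataFile(chunk, key(chunk[0]), key(chunk[-1])))
    return output


if __name__ == "__main__":
    # Three small files with interleaved order dates.
    files = [
        [{"orderdate": d, "qty": 1} for d in (5, 1, 9)],
        [{"orderdate": d, "qty": 2} for d in (3, 8, 2)],
        [{"orderdate": d, "qty": 3} for d in (7, 4, 6)],
    ]
    for f in sort_compact(files, ["orderdate"], rows_per_file=5):
        print(len(f.rows), f.min_key, f.max_key)
```

A real compactor has to sort data that does not fit in memory, out of core or across a cluster, which is where the extra CPU, memory, and time listed above go.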

Sort vs. Binpack: Why Your Choice Matters

In the world of big data, sorting isn’t just an organizational preference; it’s a performance multiplier. Whether you're chasing faster queries or lower storage costs, how you lay out your data plays a critical role. Our latest benchmarking using the TPC-H 3TB dataset shows just how dramatic the difference can be. From avoiding the 51% extra data that unsorted tables scan to squeezing more efficiency out of compression algorithms, sorted data gives Iceberg tables a decisive edge. Let’s unpack how.

Improved Query Performance through Sorting

Benchmarking Iceberg tables using the TPC-H 3TB dataset reveals a significant performance advantage when data is sorted. The chart shows that unsorted tables consistently scan more data, adding up to a cumulative 51% increase in data scanned across typical analytical queries. Sorting data during compaction improves data locality and makes predicate pushdown more effective, which reduces I/O overhead and speeds up queries. For analytical workloads, sorting substantially reduces the amount of data query engines must process.
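Why does sorting reduce scanned data? Query planners compare a filter against each file's min/max statistics and skip files that cannot match. The following Python sketch compares a sorted layout with an unsorted one in which rows were spread round-robin across files. It is illustrative only: it counts files rather than bytes and uses synthetic integer keys.

```python
# Illustrative file-skipping sketch: count files whose [min, max] bounds
# overlap a range predicate, for sorted vs. round-robin (unsorted) layouts.
from typing import List, Tuple


def layout(keys: List[int], n_files: int, sorted_layout: bool) -> List[List[int]]:
    if sorted_layout:
        ordered = sorted(keys)
        size = -(-len(ordered) // n_files)  # ceiling division
        return [ordered[i:i + size] for i in range(0, len(ordered), size)]
    # Unsorted: rows are spread across files round-robin, as parallel writers might.
    return [keys[i::n_files] for i in range(n_files)]


def files_to_scan(files: List[List[int]], lo: int, hi: int) -> int:
    """Count files whose [min, max] range overlaps the predicate lo <= key <= hi."""
    bounds: List[Tuple[int, int]] = [(min(f), max(f)) for f in files if f]
    return sum(1 for fmin, fmax in bounds if fmax >= lo and fmin <= hi)


if __name__ == "__main__":
    keys = list(range(1000))
    for is_sorted in (False, True):
        files = layout(keys, n_files=10, sorted_layout=is_sorted)
        print("sorted" if is_sorted else "unsorted", files_to_scan(files, 100, 199))
```

With 1,000 keys in 10 files, the range 100 to 199 overlaps every unsorted file, because each one spans nearly the full key range, but only one sorted file.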

Enhanced Storage Efficiency with Sorted Data

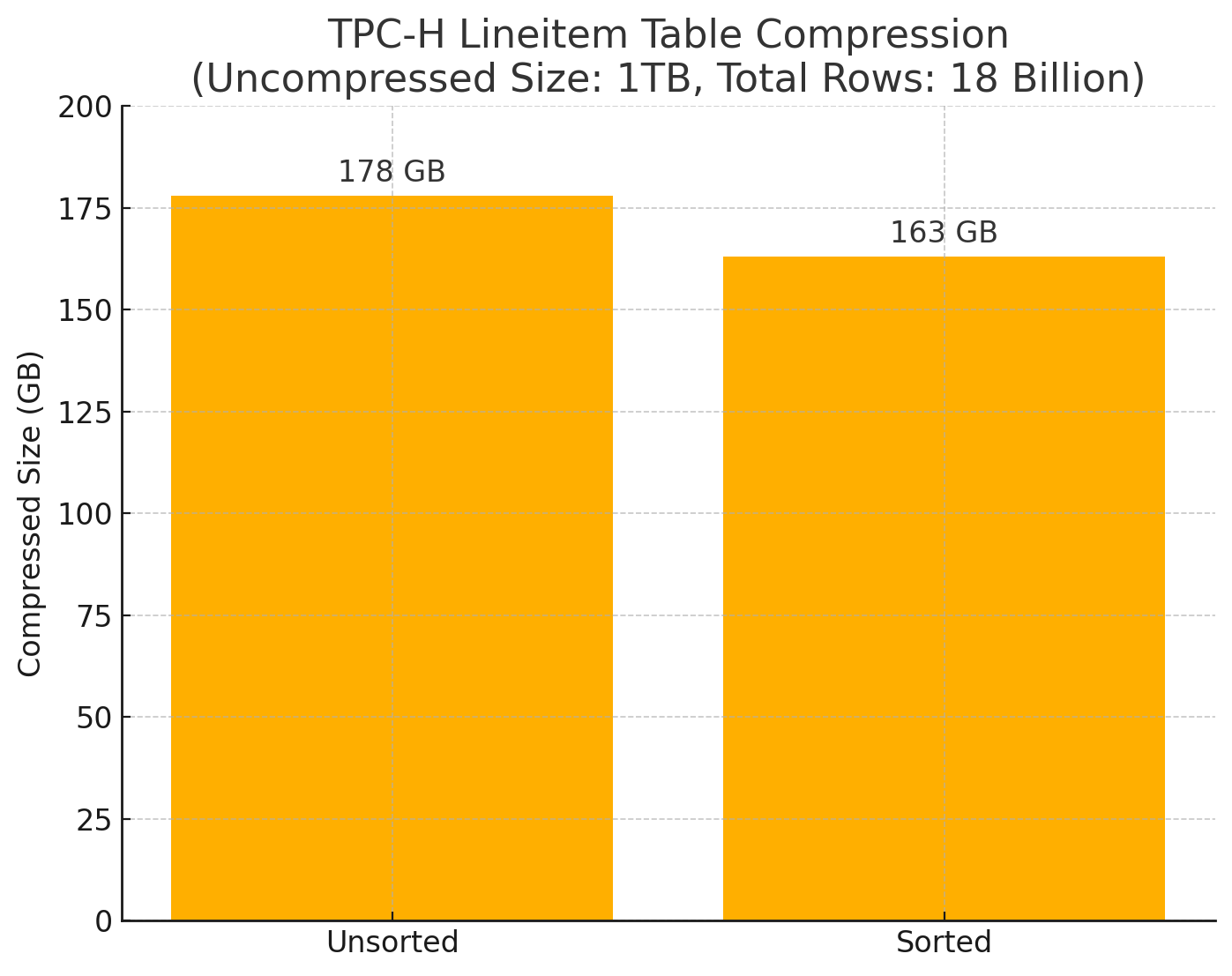

Sorting data not only improves query performance but also yields notable compression gains. Using the TPC-H Lineitem table as a reference (18 billion rows, roughly 1TB of uncompressed data), sorted data compresses to 163GB versus 178GB for unsorted data, a roughly 9% better compression ratio. The improvement arises because sorting places similar values next to each other within each file, which encodings and compression algorithms exploit effectively. Sorting therefore yields two benefits, lower storage costs and faster queries, which makes it a compelling choice for optimizing Iceberg tables.
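The compression effect is easy to reproduce at small scale. This Python sketch uses zlib as a stand-in for Parquet's encodings and codecs, so its absolute numbers say nothing about Iceberg; it only shows that the same low-cardinality column (the seven TPC-H ship modes, drawn at random) compresses far better once sorted.

```python
# Illustrative only: zlib stands in for Parquet's encodings and codecs,
# so the ratio will not match the TPC-H figures.
import random
import zlib

SHIP_MODES = ["AIR", "FOB", "MAIL", "RAIL", "REG AIR", "SHIP", "TRUCK"]


def compressed_size(values):
    """Bytes needed to store the newline-joined column after zlib compression."""
    return len(zlib.compress("\n".join(values).encode(), 6))


random.seed(42)
column = [random.choice(SHIP_MODES) for _ in range(100_000)]
unsorted_bytes = compressed_size(column)
sorted_bytes = compressed_size(sorted(column))
print(f"unsorted: {unsorted_bytes} bytes, sorted: {sorted_bytes} bytes")
```

The sorted column collapses into seven long runs that any compressor encodes cheaply; columnar formats benefit the same way through dictionary and run-length encoding.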

Choosing Between Sort and Binpack

The choice between sort and binpack compaction largely depends on your specific workload and priorities:

If query performance is critical, especially with predictable query patterns or frequent range queries, sorting provides significant benefits.

If operational cost and speed of compaction are priorities, binpack offers quick wins with lower resource usage.

A Hybrid Approach

Some teams find the most efficient solution by adopting a hybrid strategy:

Regular binpack compactions to manage file growth and metadata.

Periodic sort compactions on specific datasets or partitions heavily used in range queries.

This balanced approach often yields the best results, optimizing both cost and performance.
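On Spark, a hybrid schedule maps naturally onto Iceberg's rewrite_data_files procedure, which accepts strategy, sort_order, where, and options arguments. The Python helper below is hypothetical: it only renders the CALL statements, with placeholder catalog, table, column, and date values, which you would then pass to spark.sql(...) on whatever schedule you choose. It does not escape quotes, so string literals inside the where clause should use double quotes.

```python
# Hypothetical helper: renders Iceberg rewrite_data_files CALL statements.
# Catalog, table, column names, and filters below are placeholders.
from typing import Dict, Optional


def rewrite_call(catalog: str, table: str, strategy: str,
                 sort_order: Optional[str] = None,
                 where: Optional[str] = None,
                 options: Optional[Dict[str, str]] = None) -> str:
    args = [f"table => '{table}'", f"strategy => '{strategy}'"]
    if sort_order:
        args.append(f"sort_order => '{sort_order}'")
    if where:
        # Not escaped: use double quotes for string literals inside `where`.
        args.append(f"where => '{where}'")
    if options:
        pairs = ", ".join(f"'{k}', '{v}'" for k, v in options.items())
        args.append(f"options => map({pairs})")
    return f"CALL {catalog}.system.rewrite_data_files({', '.join(args)})"


if __name__ == "__main__":
    # Frequent, cheap pass over the whole table.
    print(rewrite_call("my_catalog", "tpch.lineitem", "binpack",
                       options={"target-file-size-bytes": str(512 * 1024 * 1024)}))
    # Occasional, expensive pass limited to recent, range-queried data.
    print(rewrite_call("my_catalog", "tpch.lineitem", "sort",
                       sort_order="l_shipdate ASC NULLS LAST",
                       where='l_shipdate >= "1998-01-01"'))
```

The binpack call runs often and touches the whole table; the sort call is limited by where to the data that range queries actually hit, so its higher cost is paid only where it pays off.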

Introducing Rust Compactor for Apache Iceberg

We recently developed a high-performance Rust-based compactor designed to make Apache Iceberg table compaction more efficient. Our benchmarks show the benefits: for a dataset of approximately 200GB compressed (around 1TB uncompressed), Apache Spark binpack compaction took around 1,612 seconds at a cost of $1.54. Our Rust-based compactor reduced this to just 221 seconds and $0.21, a time and cost saving of approximately 86%. Even for the more intensive sort compaction, the Rust compactor finished in only 780 seconds at a cost of $0.74, still about half the time and cost of Spark's binpack run.
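The percentages follow directly from the reported figures. This short Python snippet recomputes them; it adds no new data, only arithmetic on the numbers above.

```python
# Reported benchmark figures: (seconds, USD).
spark_binpack = (1612, 1.54)
rust_binpack = (221, 0.21)
rust_sort = (780, 0.74)


def saving(baseline, candidate):
    """Fractional reduction in (time, cost) relative to the baseline."""
    return tuple(1 - c / b for b, c in zip(baseline, candidate))


print("Rust binpack vs Spark binpack: {:.1%} time, {:.1%} cost".format(
    *saving(spark_binpack, rust_binpack)))
print("Rust sort vs Spark binpack:    {:.1%} time, {:.1%} cost".format(
    *saving(spark_binpack, rust_sort)))
```

The Rust binpack run saves about 86% in both time and cost, and the Rust sort run comes in at roughly 48% of the Spark binpack baseline on both measures.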

These improvements come from Rust’s advantages for this kind of workload: lower runtime overhead (native code with no JVM or garbage collector), memory safety largely enforced at compile time, and efficient handling of high-throughput workloads. The result is a compaction process that greatly reduces runtime and substantially lowers infrastructure expenses.

See full benchmark results here

Conclusion

Apache Iceberg offers several strategies to compact data lakes effectively: Binpack, Sort, and Z-order. Binpack is cheaper and faster, while Sort delivers superior query performance but has traditionally been resource-intensive and slow. Our Rust-based compactor changes this trade-off by drastically reducing both the time and cost of Sort compaction. Now that sorting approaches the efficiency previously reserved for Binpack, the distinction between these strategies is blurring. Could sorting soon become the default standard for Iceberg compaction, removing the need to compromise between speed, cost, and query performance altogether?

If you’re looking for a managed solution that handles Iceberg table maintenance seamlessly, boosting performance and reducing costs without any hassle, check out Lakesphere.